- Supply Chain Sanctions: The Pentagon is moving to designate Anthropic as a "supply chain risk" due to the company's refusal to grant unrestricted military access to its AI model, Claude.

- Policy Deadlock: Negotiations over the renewal of the "Claude Gov" classified version have stalled as Anthropic seeks to prohibit use for autonomous weaponry and mass surveillance of American citizens.

- Mandatory Divestment: A formal risk designation would require all defense contractors and their suppliers to remove Claude from their internal workflows and document analysis systems.

- Venezuela Operation: Reports indicate that Claude was utilized through a Palantir partnership during a January military raid in Caracas, despite company terms prohibiting violent deployments.

- Operational Demands: Defense officials are demanding an "all lawful purposes" standard for AI usage, arguing that ethical guardrails create unworkable gray areas for battlefield commanders.

- Market Competition: Competitors including Google, OpenAI, and xAI have reportedly agreed to remove military guardrails on unclassified systems and are seeking access to classified networks.

- Technical Superiority: Claude Gov currently remains the only AI model operating on the military's classified networks, as competing models are regarded by some officials as less advanced for intelligence analysis.

- Economic Leverage: The Pentagon is using the supply chain designation as a tool to signal that domestic technology firms must prioritize military requirements over internal ethical frameworks to maintain government eligibility.

The Pentagon is preparing to designate Anthropic as a "supply chain risk" and sever business ties with the AI company over its refusal to allow unrestricted military use of Claude, Axios reported on Monday. Defense Secretary Pete Hegseth is "close" to making the decision after months of failed negotiations, a senior Pentagon official told the outlet. "It will be an enormous pain in the ass to disentangle, and we are going to make sure they pay a price for forcing our hand like this," the official said.

Supply chain risk designations are typically reserved for foreign adversaries and hostile actors, the kind of label slapped on Huawei routers or Russian software vendors. Not American companies. Applying it to Anthropic, widely regarded as one of the country's leading AI developers, would represent an extraordinary act of retaliation by the Pentagon against a domestic technology firm.

The Breakdown

- Pentagon preparing to label Anthropic a "supply chain risk," forcing all military contractors to drop Claude

- Dispute centers on mass surveillance and autonomous weapons; Pentagon demands "all lawful purposes" standard

- Claude reportedly used in Venezuela/Maduro raid via Palantir; Anthropic's terms prohibit violent deployment

- Google, OpenAI, and xAI agreed to remove military guardrails but none yet operate on classified networks

What the fight is about

Anthropic and the Pentagon have spent months in contentious negotiations over the renewal of Claude Gov, the classified version of Claude built specifically for the U.S. national security apparatus. Two issues are holding up the deal. Anthropic wants to ensure Claude isn't used for mass surveillance of American citizens or to develop weapons that fire without human involvement. The Pentagon is demanding the right to use Claude for "all lawful purposes," a standard it is also pressing on Google, OpenAI, and Elon Musk's xAI.

"The Department of War's relationship with Anthropic is being reviewed," chief Pentagon spokesperson Sean Parnell told The Hill on Monday. "Our nation requires that our partners be willing to help our warfighters win in any fight. Ultimately, this is about our troops and the safety of the American people."

The company's position is that current U.S. surveillance law was never written with AI in mind. The government already collects enormous quantities of personal data, from social media posts to concealed carry permits. AI could supercharge that authority in ways existing statutes don't contemplate. Think pattern-matching across millions of civilian records, cross-referencing behavior at a scale no human analyst corps could replicate. Anthropic has said it is prepared to loosen its current terms but wants explicit boundaries.

Pentagon officials counter that the restrictions are too broad and would create unworkable gray areas on the battlefield. Defense officials have argued that drawing bright lines around specific capabilities would hamper operations in scenarios nobody can fully predict yet. Anthropic's critics inside the Pentagon call the company's insistence on such lines naive at best and obstructionist at worst.

The relationship appears to have soured beyond the specifics of the contract. A source familiar with the negotiations told Axios that senior defense officials had been frustrated with Anthropic for some time and "embraced the opportunity to pick a public fight."

The Venezuela trigger

The Wall Street Journal reported last week that Claude was used during the U.S. military operation in January that captured Venezuelan President Nicolas Maduro from his residence in Caracas. The AI tool reached the battlefield through Anthropic's partnership with Palantir Technologies, which holds extensive military and intelligence contracts. That revelation turned a simmering contract dispute into something more volatile.

Venezuelan authorities said 83 people were killed in bombings across the capital during the raid. Anthropic's own terms of use prohibit Claude's deployment for violent purposes, weapons development, or surveillance. Claude Gov has capabilities ranging from processing classified documents and intelligence analysis to cybersecurity data interpretation, and it was unclear which of those functions the military called on during the operation.

Anthropic declined to comment on whether its technology played a role but said any use would need to comply with its usage policies. Palantir and the Pentagon declined to comment.

Reports of the raid prompted Anthropic to inquire about whether its technology had been involved, according to The Hill, though the company denied making any outreach to the Pentagon or Palantir about the incident. The question itself was enough to deepen the rift. Here was a company that had staked its brand on responsible development, now staring at reports that its technology may have supported a military operation that killed dozens of people. Nobody confirmed anything. Nobody denied it either. And somewhere in Arlington, the contract renewal was still sitting on a desk.

What supply chain risk actually means

If the Pentagon goes through with the designation, the consequences reach far beyond Anthropic's military contract. Every company that does business with the Pentagon would need to certify that it does not use Claude in its own workflows. That's the kind of compliance burden normally imposed over Chinese telecom equipment or adversary-state technology. Not a San Francisco AI startup.

Losing the military deal itself wouldn't cripple Anthropic. The two-year contract, announced last July, is worth up to $200 million, a fraction of the company's reported $14 billion in annual revenue. The real damage is the ripple.

Eight of the ten largest U.S. companies use Claude, according to Anthropic. The technology sits inside enterprise systems across finance, healthcare, legal services, and government, running on laptops in procurement offices and servers in contractor data centers. Defense contractors and their suppliers who rely on Claude for document analysis, coding assistance, or internal operations would all need to strip it out or prove they'd stopped. That's the "enormous pain in the ass" the Pentagon official was describing, and it lands on the companies that depend on Anthropic's tools, not just on Anthropic itself.

Then there's the market signal. If you're an enterprise buyer evaluating AI vendors, the designation forces a new question into the procurement conversation: does choosing Claude put future government contracts at risk? That kind of uncertainty doesn't hit revenue immediately. It shows up in renewal conversations, in procurement meetings where a general counsel raises a flag, in RFP language that quietly specifies "Pentagon-compatible AI providers." The Pentagon knows this. A $200 million contract is a bargaining chip. A supply chain designation is a weapon.

Competitors already in the room

Pentagon officials have made clear they have alternatives. Google, OpenAI, and xAI have all agreed to remove their guardrails for military use on unclassified systems, Axios reported. All three are negotiating access to classified military networks. Pentagon officials expressed confidence that the companies would accept the "all lawful purposes" standard.

But swapping out Anthropic would not be painless. A senior administration official acknowledged that competing models "are just behind" for specialized government applications. Claude Gov was purpose-built for handling classified materials, interpreting intelligence, and processing cybersecurity data. It remains the only AI model running on the military's classified networks. OpenAI has built a custom GenAI.mil tool for the Pentagon and allied nations. Google already provides a customized version of Gemini for defense research. xAI, owned by Musk, signed a Pentagon agreement in January. None of them operate on classified systems yet.

Officials who have used Claude Gov rate it highly. No complaints about the tool's capability during the Maduro operation or anywhere else. The grievance is entirely about terms, not technology. Which makes the threatened divorce harder to read at face value: the Pentagon wants to banish the product it praises most.

Precedent matters as much as the switch itself. How Anthropic's negotiations resolve will shape the contract terms for every AI company seeking classified military work. OpenAI, Google, and xAI are watching. A source familiar with those discussions told Axios that "much is still undecided" despite the Pentagon's confidence, suggesting the three companies haven't fully committed to whatever the Pentagon is asking behind closed doors.

A loyalty test dressed as policy

Anthropic has built its identity around the idea that powerful AI requires guardrails. CEO Dario Amodei has called publicly for regulation to prevent catastrophic harms from AI. He has warned against autonomous lethal operations and mass surveillance on U.S. soil. The company raised billions in funding partly on the premise that responsible development and commercial success are compatible goals. Investors bought that vision. Enterprise customers bought it. The Pentagon, for a while, bought it too.

Hegseth tested that premise in January when he told reporters the Pentagon wouldn't "employ AI models that won't allow you to fight wars." That framing collapses Anthropic's specific objections, on surveillance law, on weapons autonomy, into something blunter: a refusal to cooperate. It turns a policy dispute into a question of allegiance. Other AI companies appear to have taken note. Google employees staged walkouts over a Pentagon drone contract called Project Maven back in 2018. That was a different era. Google is now negotiating classified network access for Gemini, no Anthropic-style restrictions attached.

Anthropic hasn't walked away from the table. A spokesperson told The Hill on Monday that Anthropic remained "committed" to supporting U.S. national security and described the ongoing conversations as "productive" and conducted "in good faith." The company pointed out it was the first frontier AI developer to put models on classified networks and the first to build customized models for national security customers.

Good faith has limited currency when the other side is telling reporters you'll "pay a price." Pentagon officials aren't characterizing this as a disagreement between reasonable parties hashing out contract language. They're treating Anthropic's ethical boundaries like a contamination risk, something to be flagged and cut out of the supply chain entirely.

Anthropic built the most advanced classified AI system the Pentagon has ever deployed. That same technology reportedly played a role in an active military raid. Now the company faces classification alongside hostile foreign suppliers, not because the technology failed, but because its maker wanted a say in how it gets used. The contamination the Pentagon identified isn't in the code. It's in the conditions.

Frequently Asked Questions

What is a supply chain risk designation?

A Pentagon classification that bars a company's products from the U.S. military supply chain. It's typically reserved for foreign adversaries like Chinese telecom firms. If applied to Anthropic, every defense contractor would need to certify it doesn't use Claude, potentially disrupting operations at companies across finance, healthcare, and government.

What is Claude Gov?

A specialized version of Anthropic's Claude AI built for the U.S. national security apparatus. It handles classified materials, interprets intelligence, and processes cybersecurity data. It is currently the only AI model running on the military's classified networks, giving Anthropic a unique position among AI companies serving the Pentagon.

How was Claude used in the Venezuela operation?

The Wall Street Journal reported that Claude was deployed during the January military operation that captured Venezuelan President Nicolas Maduro, accessed through Anthropic's partnership with Palantir Technologies. The specific role Claude played remains unclear. Anthropic declined to confirm involvement, and both Palantir and the Pentagon declined to comment.

What restrictions is Anthropic insisting on?

Anthropic wants contract language preventing Claude from being used for mass surveillance of American citizens or to develop weapons that fire without human involvement. The company argues current surveillance law doesn't account for AI's ability to cross-reference civilian data at massive scale. The Pentagon considers these restrictions too broad for military operations.

Are other AI companies willing to work with the Pentagon without restrictions?

Google, OpenAI, and xAI have agreed to remove guardrails for military use on unclassified systems and are negotiating classified network access. Pentagon officials say all three would accept an "all lawful purposes" standard. However, a senior administration official acknowledged competing models are "just behind" Claude Gov for specialized government applications.

[

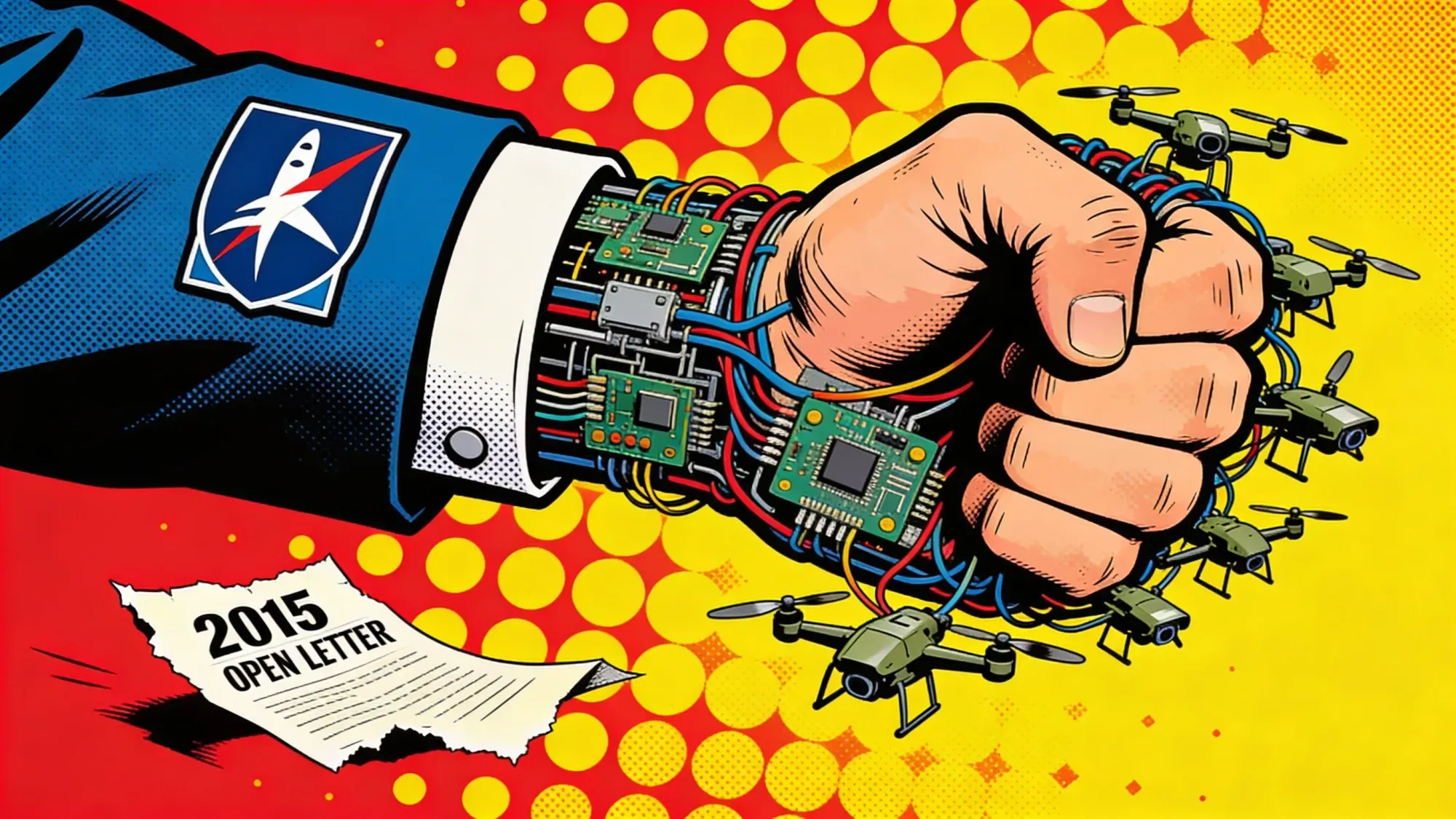

SpaceX and xAI Enter Secret $100M Pentagon Contest for Autonomous Drone Swarms

SpaceX and its subsidiary xAI are competing in a secret Pentagon contest to build voice-controlled autonomous drone swarming technology, Bloomberg reported Sunday. The $100 million prize challenge, la

The Implicator

[

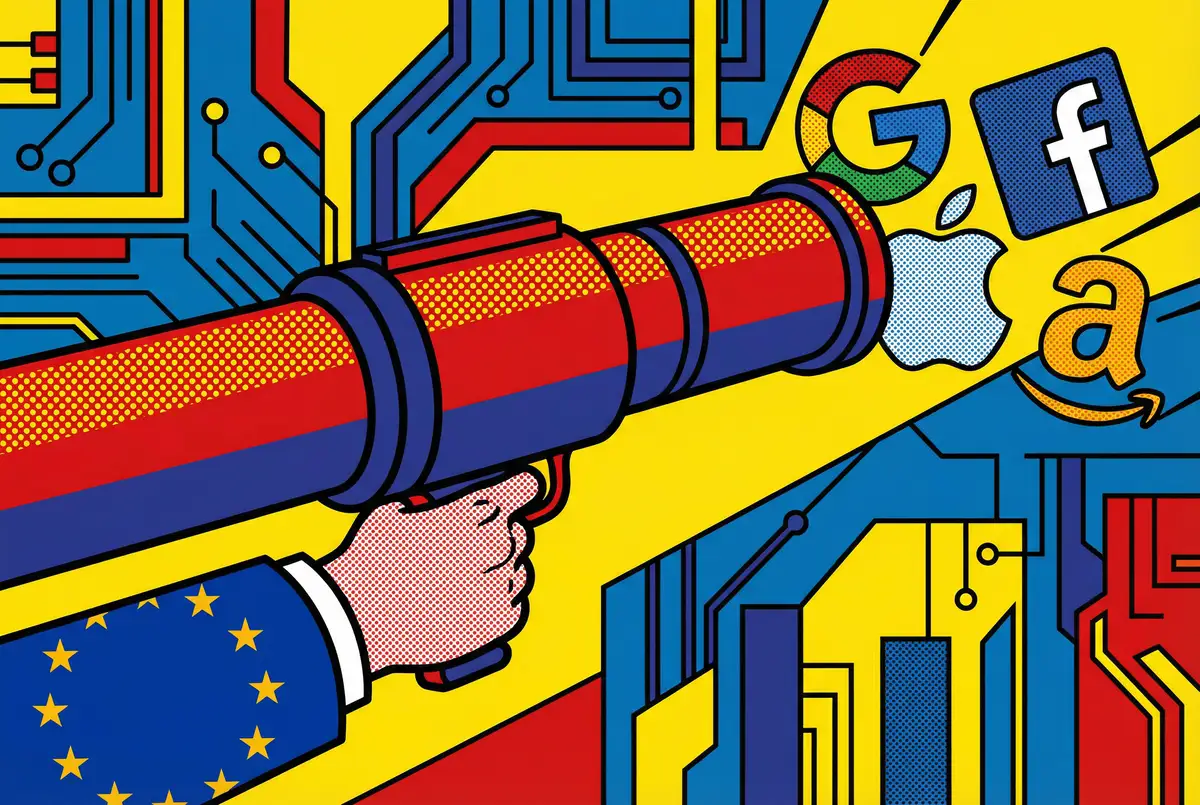

Europe's Trade Bazooka. Silicon Valley Holds Its Breath.

Thirty-six soldiers. That's what European nations sent to Greenland last week in a gesture of solidarity with Denmark. A training exercise, they called it. President Trump saw provocation. On Saturday

The Implicator

](https://www.implicator.ai/europes-trade-bazooka-silicon-valley-holds-its-breath/)

[

Europe Aims at Silicon Valley. The IMF Sees 2001.

San Francisco | January 20, 2026 Trump's Greenland tariffs just made the EU reach for its "economic nuclear weapon." The Anti-Coercion Instrument can revoke business licenses, ban government contract

The Implicator

![]()

](https://www.implicator.ai/europe-aims-at-silicon-valley-the-imf-sees-2001/)

Workers Are Afraid AI Will Take Their Jobs. They’re Missing the Bigger Danger.

Workers Are Afraid AI Will Take Their Jobs. They’re Missing the Bigger Danger. Technology Can Help People With Dementia Stay on the Job

Technology Can Help People With Dementia Stay on the Job People Trust Websites More When They Know a Human Is on Standby

People Trust Websites More When They Know a Human Is on Standby How WSJ Readers Use AI at Work

How WSJ Readers Use AI at Work Now That We Can Transcribe Work Meetings and Conversations, Should We?

Now That We Can Transcribe Work Meetings and Conversations, Should We? How Workplace Tech Has Changed Over the Years, From the WSJ Archives

How Workplace Tech Has Changed Over the Years, From the WSJ Archives Doctors and Hospitals Look to Drones to Deliver Drugs, Supplies—and Even Organs

Doctors and Hospitals Look to Drones to Deliver Drugs, Supplies—and Even Organs Office Technology Comes to the Movies: Test Your Knowledge With Our Quiz

Office Technology Comes to the Movies: Test Your Knowledge With Our Quiz